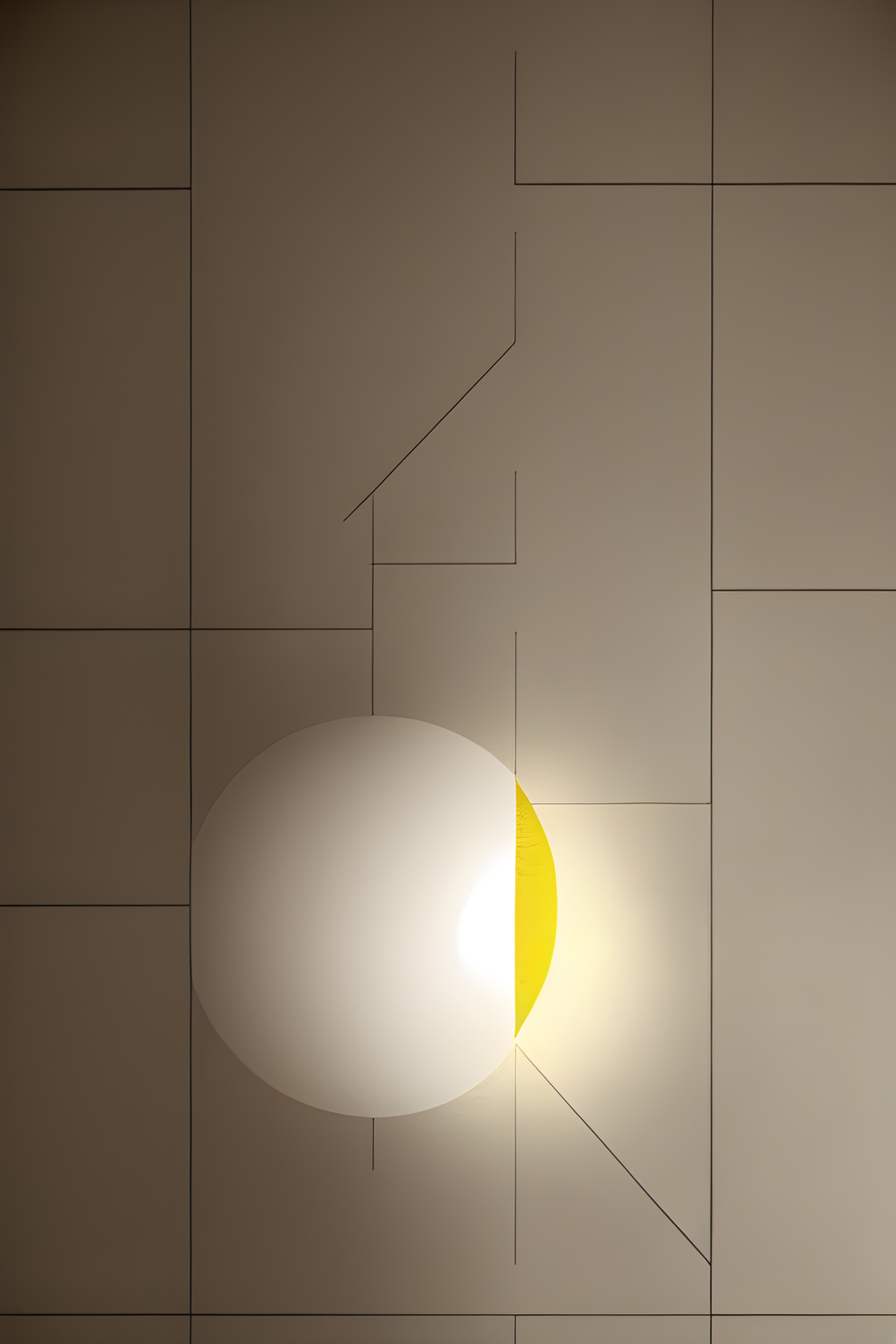

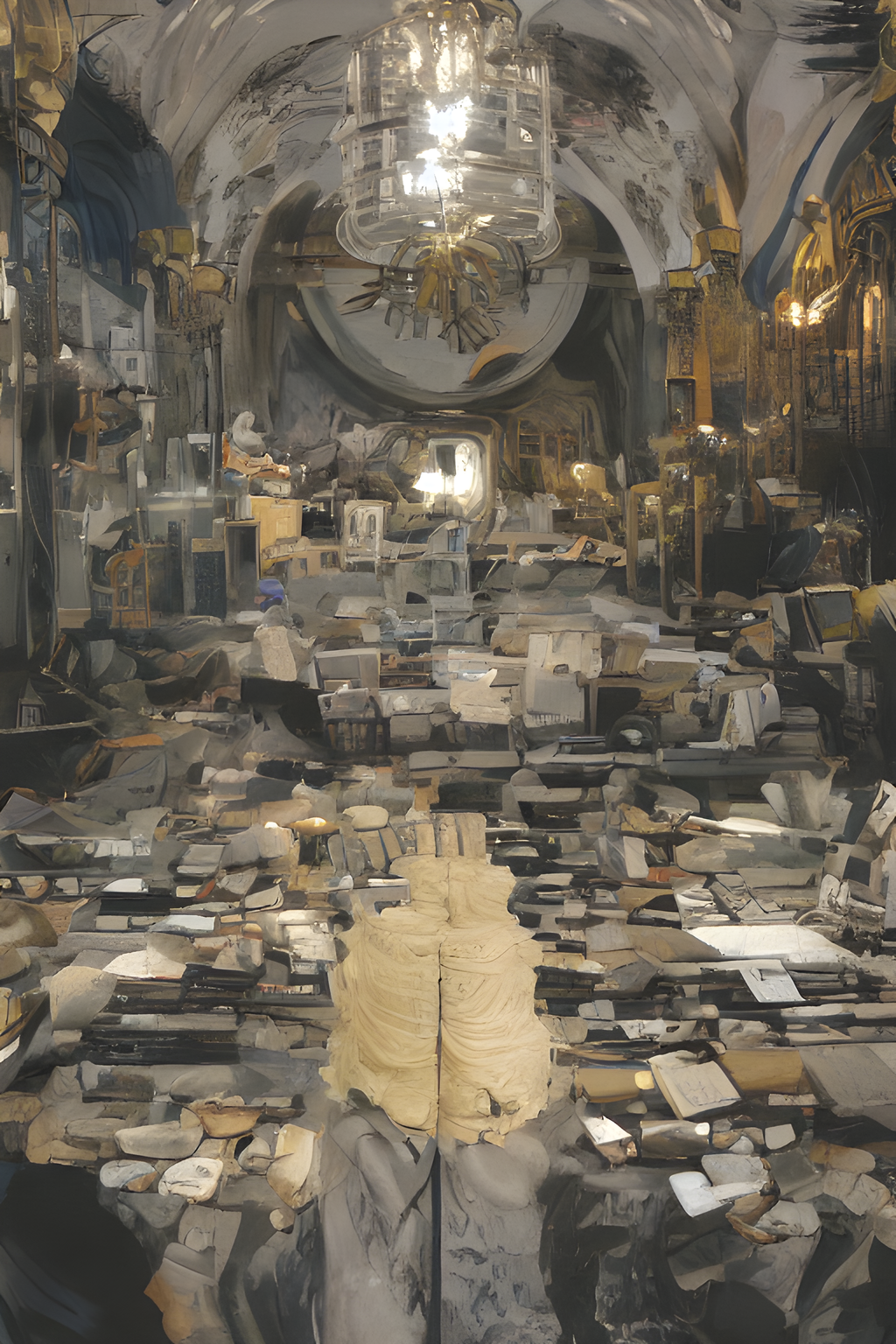

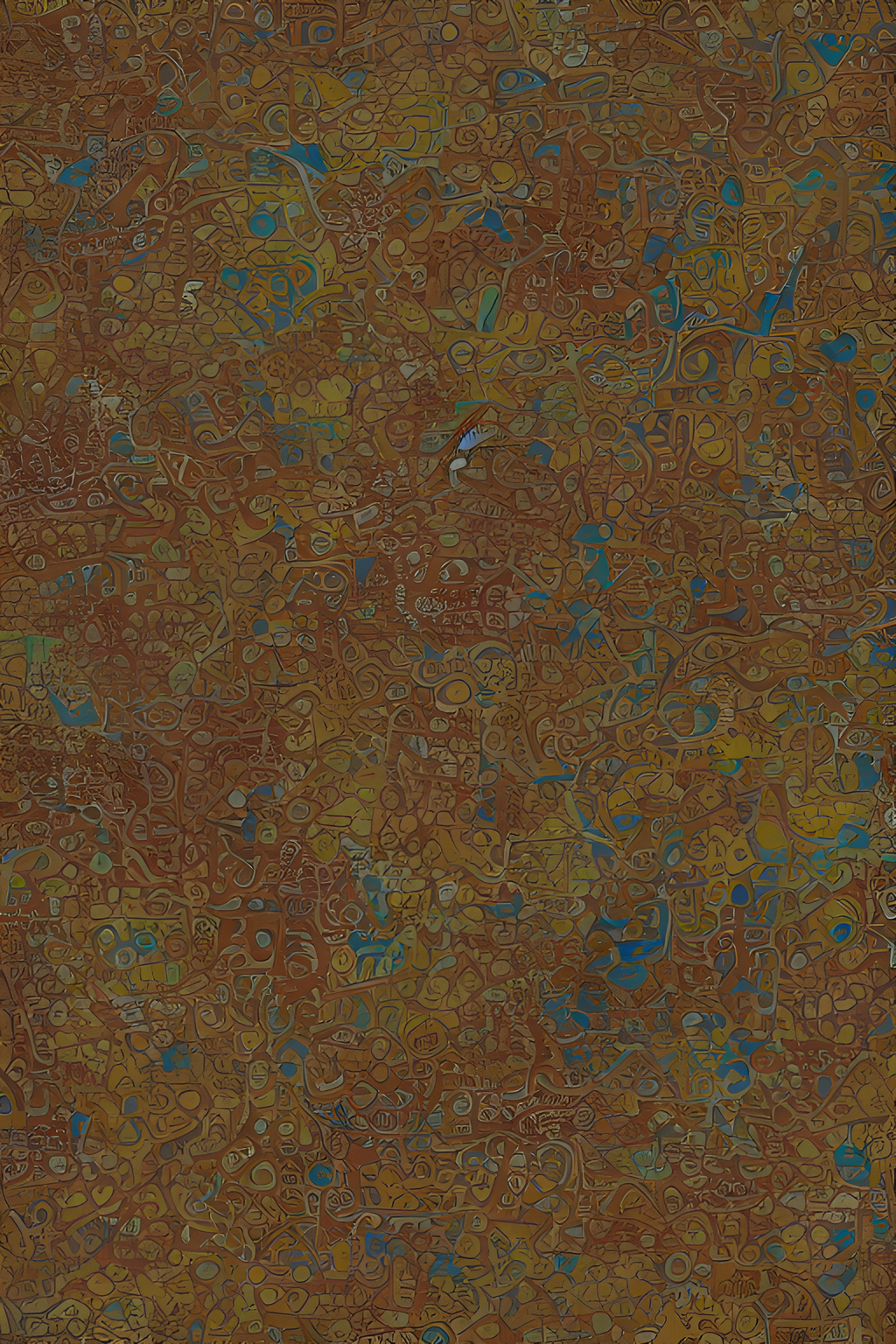

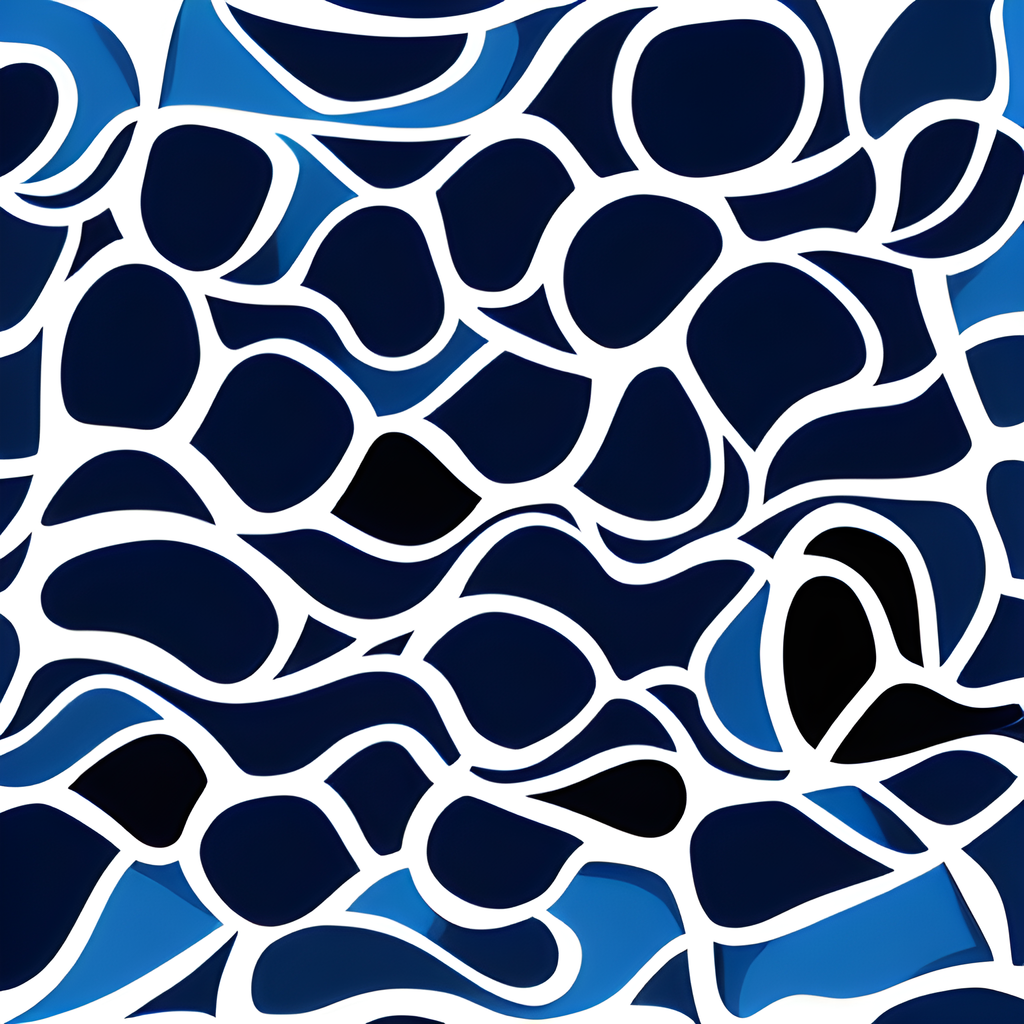

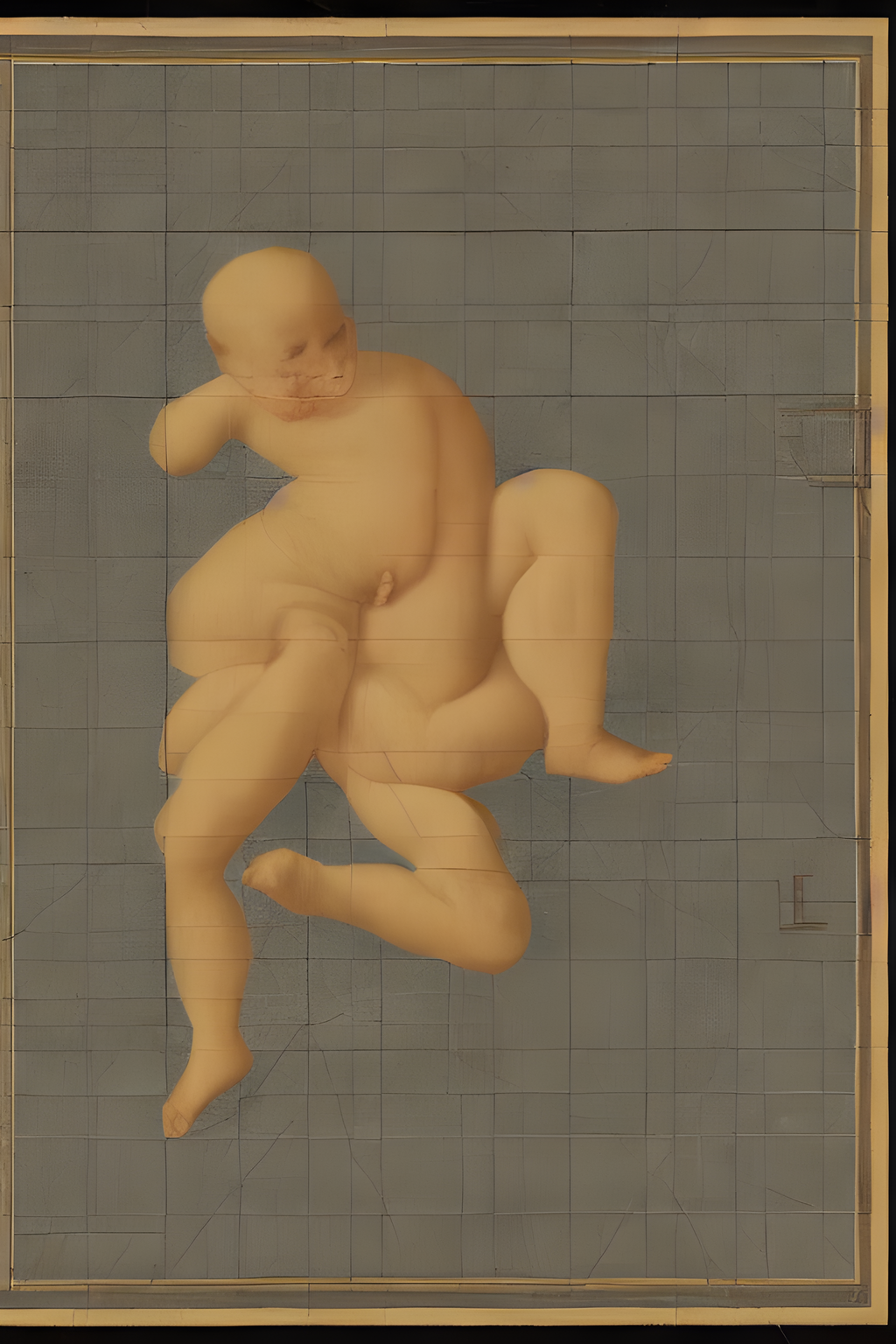

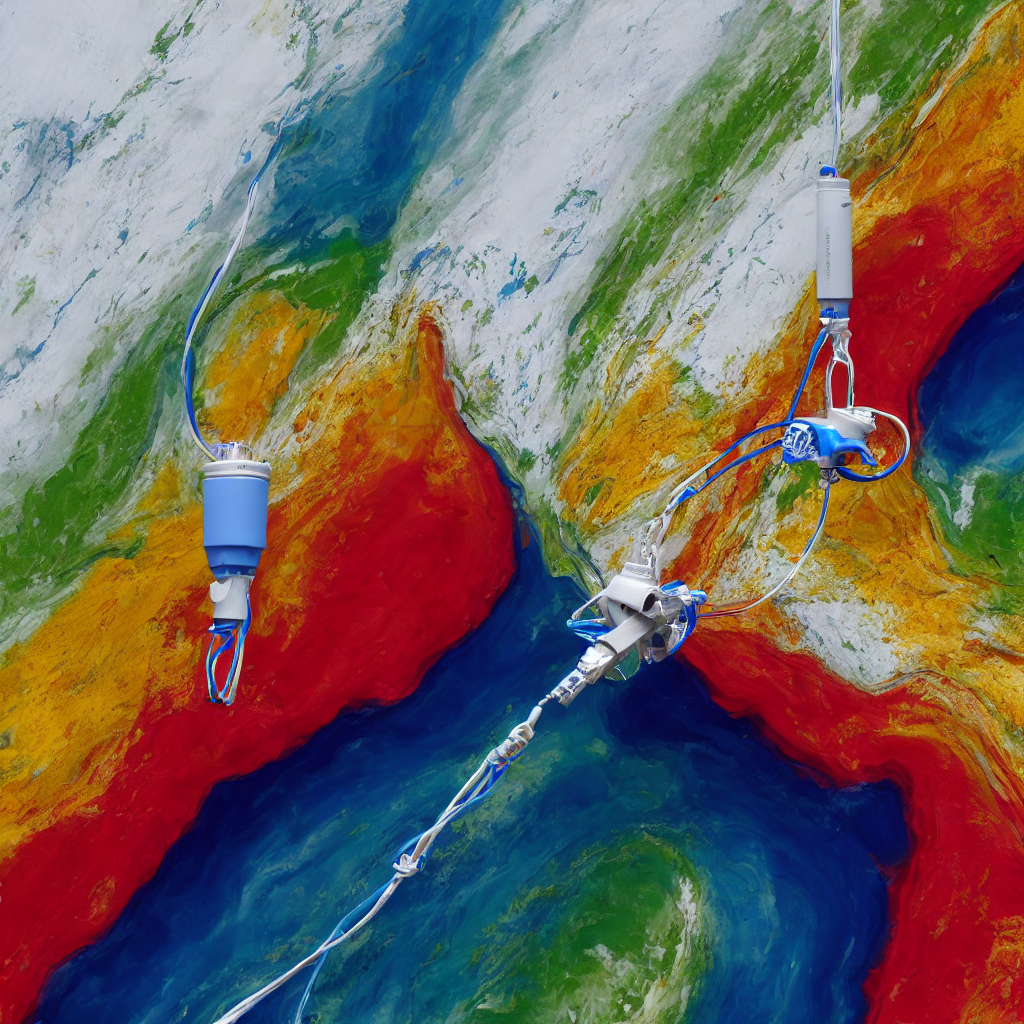

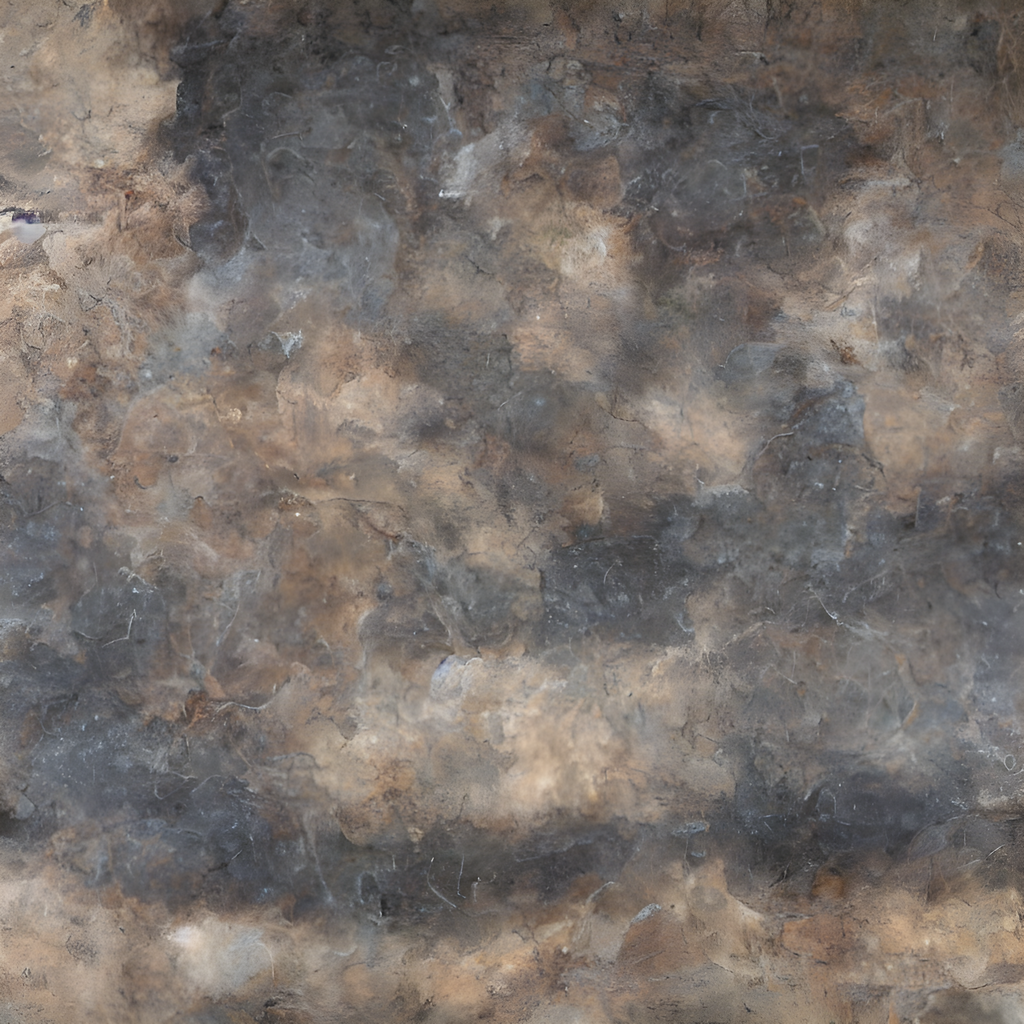

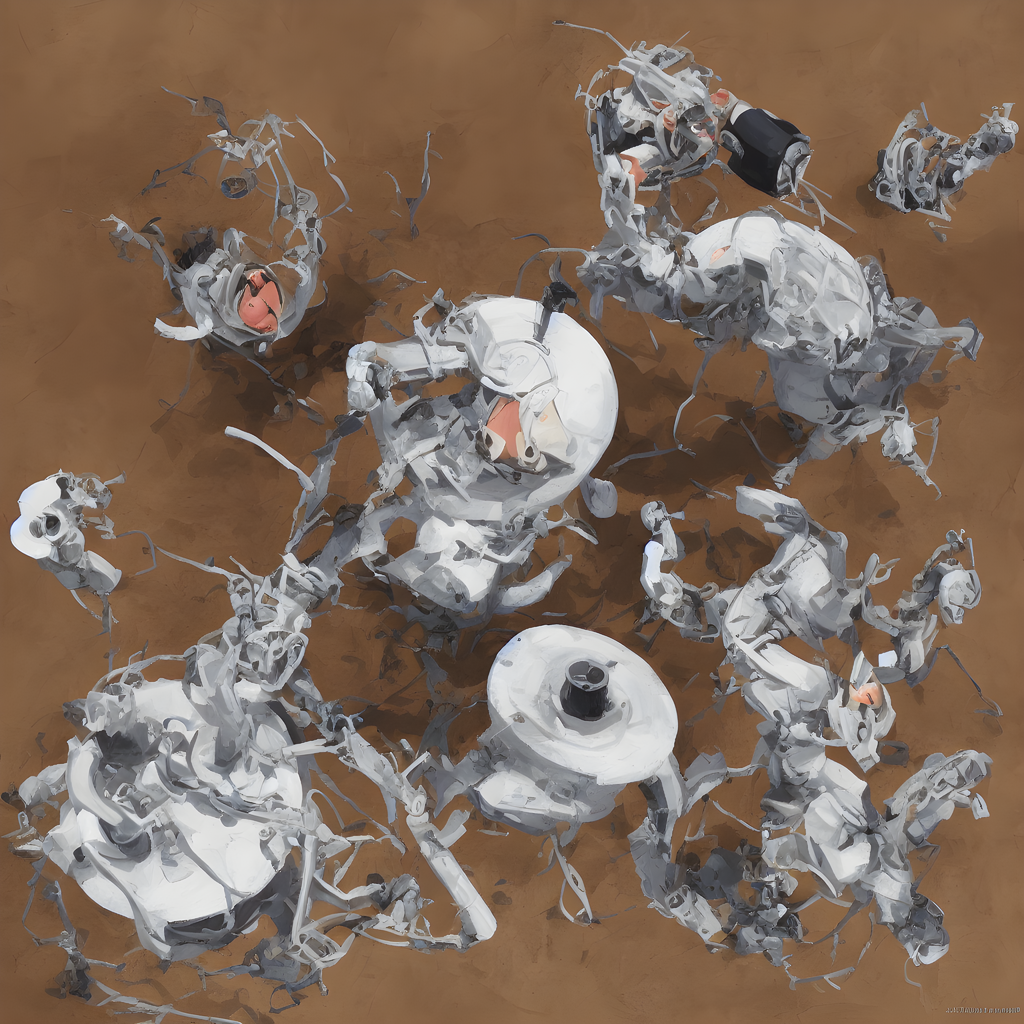

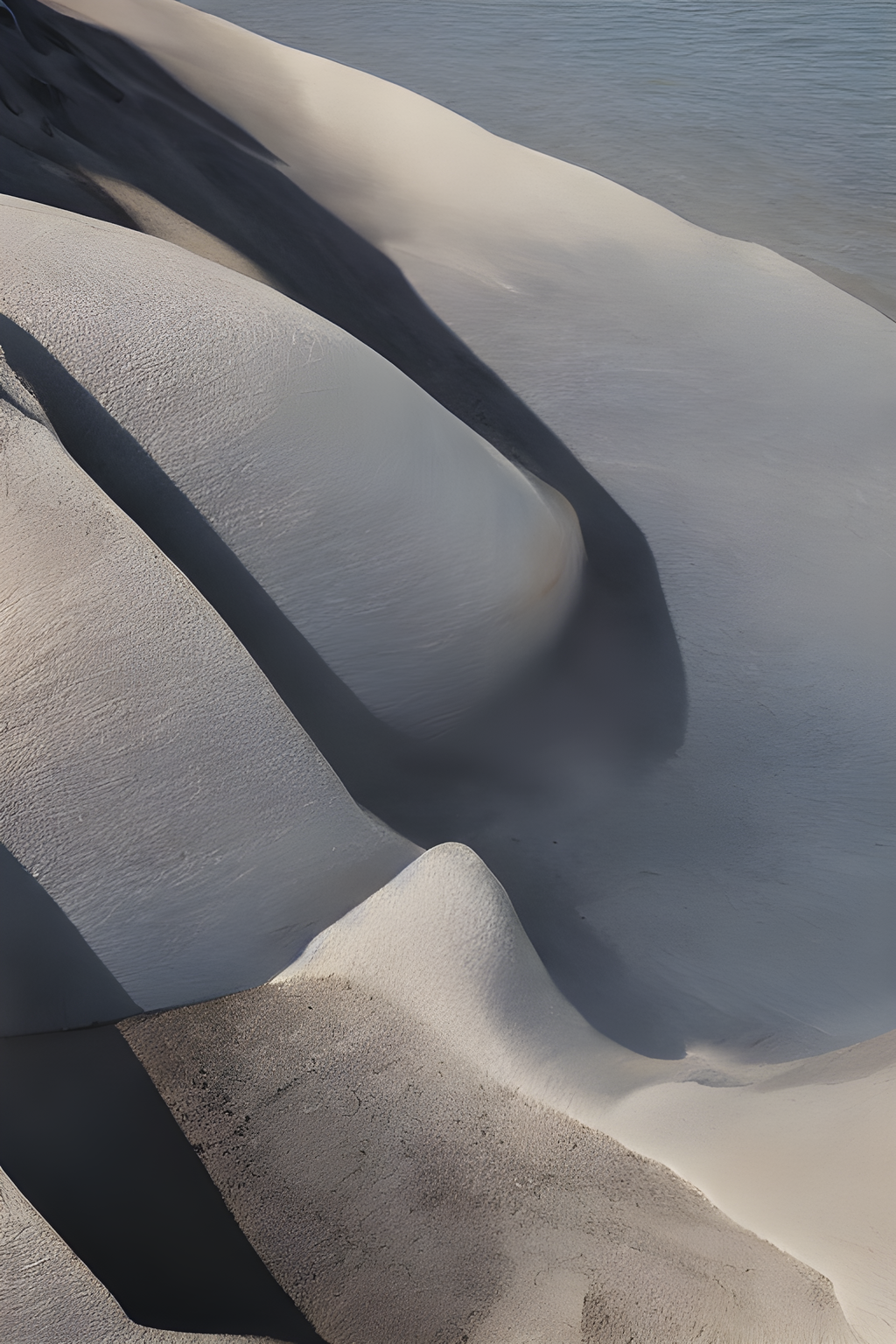

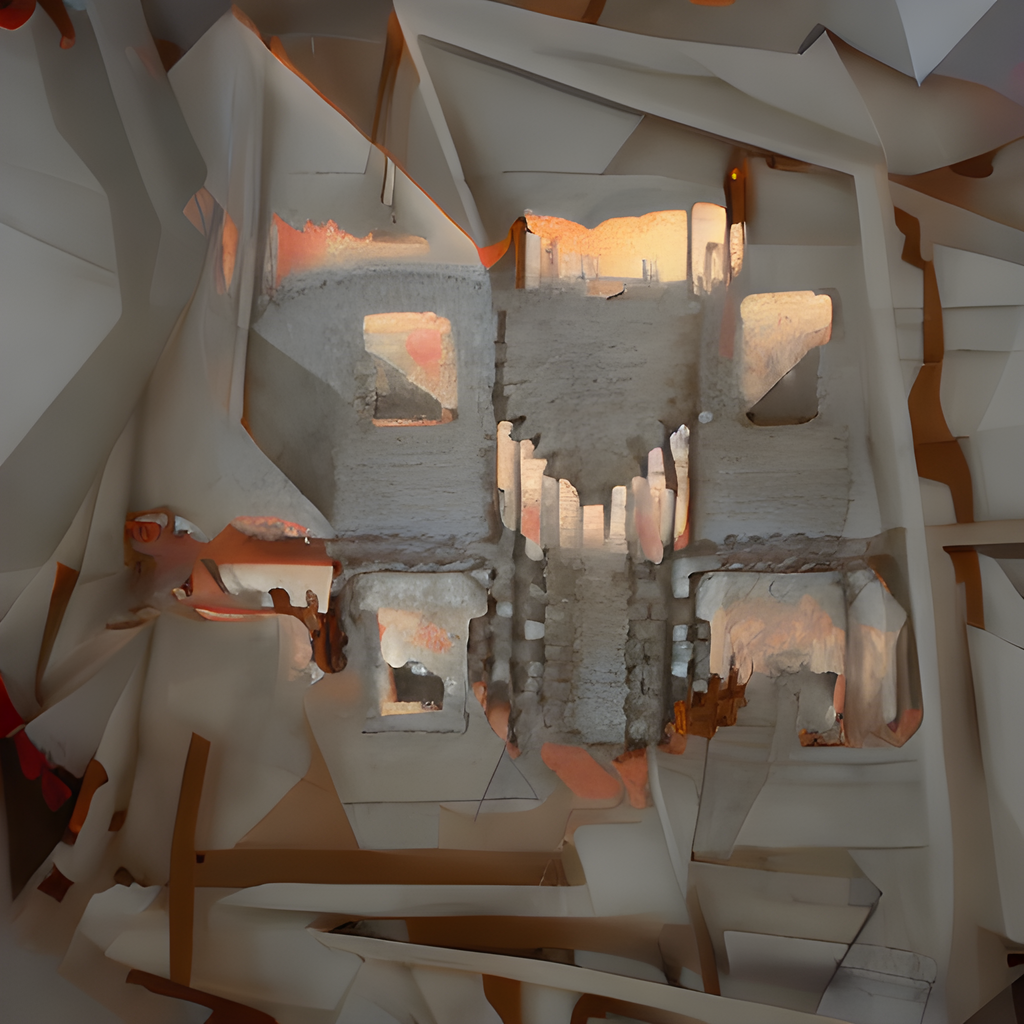

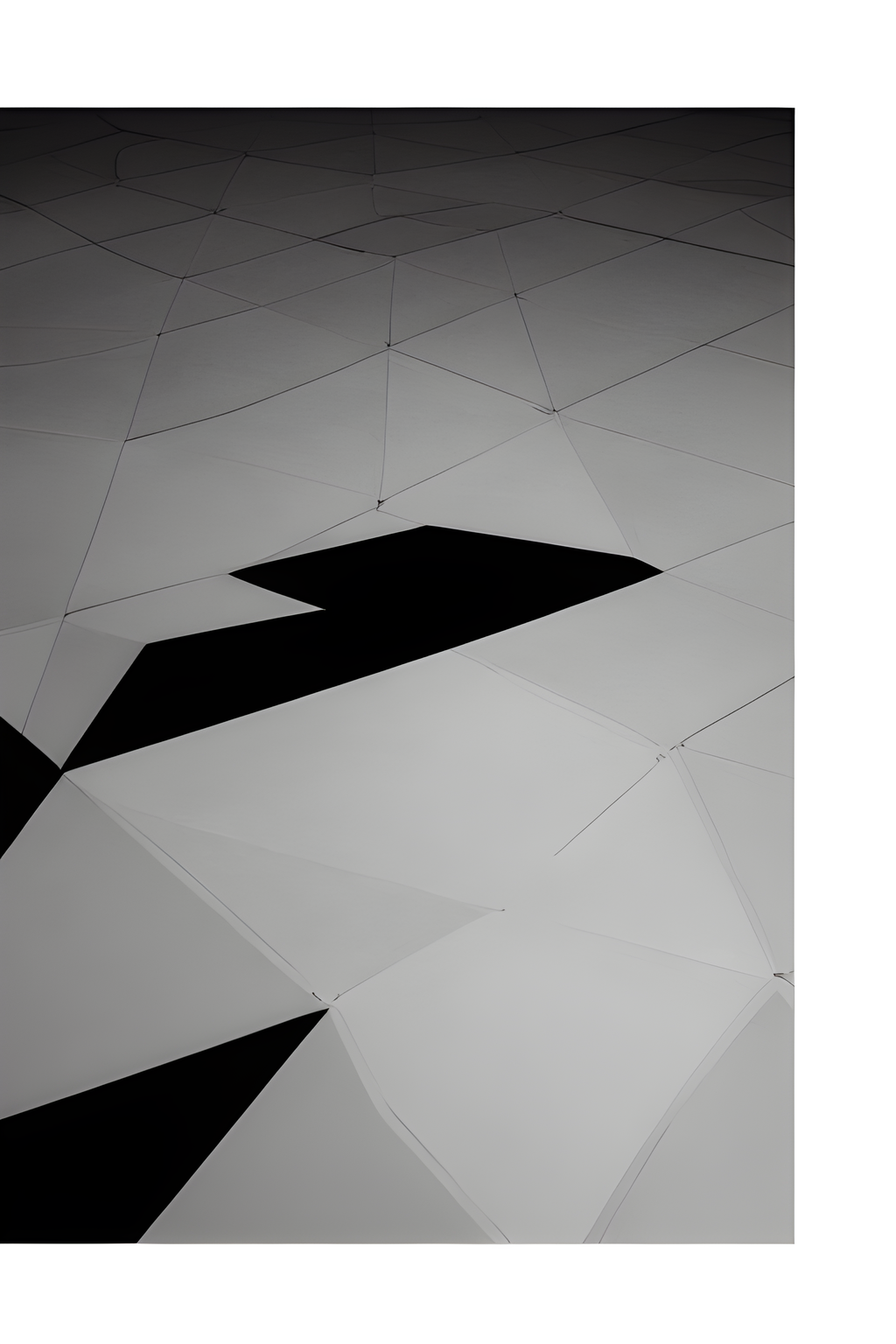

Stable Diffusion experiments

Before Christmas I finally installed the Stable Diffusion text-to-image model on my local machine. This post features my favourite images from a couple of afternoons spent playing around with it.

Click through to see them all, plus a few thoughts on the AI art debate at the bottom. The page might load slowly if you’re not on decent wifi.

The best rewards in machine art come from abusing the system and trying to make it do things it isn’t really capable of, or wasn’t designed for. For these images I used a lot of low guidance values, as well as larger output dimensions than are typically recommended for producing coherent images. I paired these settings with intentionally vague prompts, then applied the usual process of seed farming and iterative tuning.
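For anyone unfamiliar with the jargon, here is a rough Python sketch of what seed farming and iterative tuning can look like as a workflow. It only builds the list of settings to try and does not call any model. The `RenderJob`, `farm_seeds` and `tune_around` names, the prompt, the guidance values and the image sizes are all made up for illustration, and are not the actual settings or tooling behind the images above.

```python
import itertools
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RenderJob:
    """One set of generation settings (hypothetical structure)."""
    prompt: str
    seed: int
    guidance_scale: float
    width: int
    height: int


def farm_seeds(prompt, n_seeds, guidance_values, sizes, rng_seed=0):
    """Seed farming: pair many random seeds with every settings combination."""
    rng = random.Random(rng_seed)  # fixed so the same batch can be rebuilt later
    seeds = [rng.randrange(2**32) for _ in range(n_seeds)]
    return [
        RenderJob(prompt, seed, guidance, width, height)
        for seed, guidance, (width, height) in itertools.product(
            seeds, guidance_values, sizes
        )
    ]


def tune_around(job, step=0.5, floor=1.0):
    """Iterative tuning: keep a promising seed, nudge the guidance up and down."""
    candidates = {
        max(floor, job.guidance_scale - step),
        job.guidance_scale,
        job.guidance_scale + step,
    }
    return [replace(job, guidance_scale=g) for g in sorted(candidates)]


# Illustrative values only: a vague prompt, low guidance, oversized canvas.
jobs = farm_seeds("something half remembered", 3, [2.0, 3.5], [(768, 1024)])
variations = tune_around(jobs[0])
```

Each `RenderJob` would then be handed to whichever Stable Diffusion front end you use. The idea is to render a cheap batch, pick the seeds that produce something interesting, and only then spend time adjusting the other settings around them.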

Much has been made of the status or otherwise of AI-generated images as art, or as plagiarism, theft and so on. One of the most striking things about using SD is certainly that it can’t really have original ideas. Even when you try to be vague in what you ask of it, or allow it to pay less attention to the prompts, everything it produces ultimately looks like something you’ve seen before, somewhere. The most you can do is allow it to roam more or less freely through the collective unconscious and see what it brings back to you.

This effect is complemented by the tendency to assume that novel-seeming work actually represents a new idea, as opposed to merely being influenced by something that you personally are unaware of. Previously this might simply lead to inflated admiration for artists with wider reference points than you, or failure to acknowledge your own influences. Now it’s possible to rip off people whose work you’ve never been exposed to, even third-hand.

There’s much to be said about the specific character of some of these pictures - a friend described them as looking “like the sound of grinding metal” - but that will have to wait for another post.